What has September to November 2025 taught us about the future of learning?

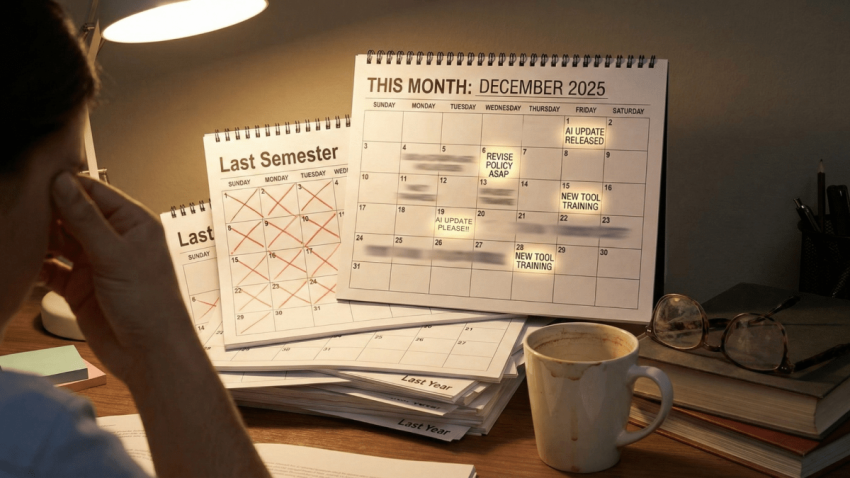

Since September 2025, the AI landscape in education has transformed at a pace that would make your head spin – it certainly has mine. I’ve been tracking these developments across LinkedIn posts, classroom experiments, and late-night deep dives into model capabilities (because apparently that’s what passes for entertainment in my world now). What’s emerged in these three months isn’t just incremental improvement in model capabilities – it’s been more of a fundamental shift in what’s possible with the existing systems, and more importantly, what our students can access with a few taps on their phones.

This isn’t another breathless “AI will revolutionise everything” piece. I’ve written enough of those already and, trust me, I’ve learned my lesson about unchecked enthusiasm – the number of times I’ve called these capabilities ‘game changers’ and the amount the game has actually changed are a little at odds. Very little has altered in the classroom space.

No, this is a more considered assessment of where we actually are in late 2025, what’s genuinely changed since September, and why that should matter to those of us standing in front of classrooms every day.

The Big Model Updates: What’s Actually Changed

Gemini 3.0: Google’s November Surprise

Google dropped Gemini 3.0 in late November 2025, and it represents what experts are calling the “November Surprise” – a genuinely transformative update rather than incremental tweaking.

The multimodal capabilities are extraordinary when they work. The graphics generation using “Nano Banana 3” (I’m still struggling with that phrase) can create genuinely useful visual content. There has also been a considerable upgrade in Gemini’s coding abilities. When I tested it for film marketing materials, it produced a decision tree exercise in HTML that worked perfectly, generated powerful infographics, and… then promptly forgot it could generate images at all.

The Jekyll and Hyde Reality: Gemini 3.0 is genuinely brilliant one moment – creating sophisticated code, producing stunning visuals, integrating seamlessly across Google Workspace – and then completely losing the plot the next. It couldn’t read a Google Sheet (a file format owned by Google!), struggled with the first four rows before giving up entirely, and then produced text-heavy graphics with bizarre spelling decisions.

This is typical Gemini: like having Pinky and the Brain show up to help with your lessons. You’re never quite sure which one you’ll get.

Key advancement: Native multimodal capabilities and Google Workspace integration (when it’s reliable enough to use)

ChatGPT 5.1: The Balanced Generalist

Released in November 2025, GPT-5.1 introduces a crucial bifurcation between “Instant” mode (fast, conversational) and “Thinking” mode (deep reasoning with chain-of-thought).

Quick classroom queries can use Instant mode without disrupting lesson flow, while complex planning or analysis can leverage Thinking mode’s deeper capabilities. ChatGPT also maintains the crucial “Memory” feature that Gemini still lacks in 2025, allowing it to remember context across conversations.

AND!!! Yes – there is a free version of ChatGPT for K-12 educators in the US (maybe we’ll get a version for the rest of the world???)

Key advancement: Adaptive intelligence modes and persistent memory capabilities

As an addendum I should say I don’t use ChatGPT – I haven’t really used it for a long time. This is down to a combination of personal preference, my perception of the company and the fact that as a teacher in a Google school, Gemini is our primary platform.

Claude Opus 4.5: The Curriculum Specialist

Claude 4.5, in all its guises, continues to excel at safety, reliability, and long-form processing, with particular strength in coding benchmarks, especially Opus. For my workflow, Claude Projects remains transformative – upload specification documents, sample materials, and subject glossaries once, and Claude maintains that context across all subsequent conversations.

I’ve been using this extensively for GCSE Media Studies planning. Upload the exam board specification, and Claude generates genuinely curriculum-aligned resources without the repeated file uploads that plague other platforms.

Add to this the fact that Claude now has memory across conversations, adaptable skills for repeated tasks and applications, and the huge coding power of Anthropic’s models, and Claude remains the heart of my workflows.

Key advancement: Projects feature for persistent curriculum knowledge and coding excellence, Claude skills for repeatable workflows.

The Education-Specific Evolution

Beyond frontier models, education-focused tools have matured significantly:

NotebookLM’s Multimodal Transformation:

- Infographic generation from uploaded sources (genuinely impressive when it works)

- Slide decks created from your sources using the Gemini image capabilities

- Video overviews in multiple visual styles (Watercolour, Anime, Whiteboard, Retro, Heritage)

- Customisable audio overviews with adjustable tone and length

- Expanded source types including images, handwritten notes, Sheets, and Drive URLs – the addition of Sheets alone means that NotebookLM’s analytical capacity is absolutely huge.

Microsoft Teams Education Updates:

- AI-enhanced assignment creation

- Reading Progress with AI-generated passages

- Automated rubric generation (competent, though Claude still edges it)

Microsoft still lags behind its competitors, but the emerging integration of Copilot into Microsoft 365 for education could be a real power-up for Microsoft schools.

Canva’s Creative Revolution (September-October 2025):

Canva started its Create ‘world tour’ back in April, but some recent additions have enhanced this already impressive, feature-packed platform.

Canva has quietly become indispensable in my workflow, and the autumn 2025 updates have transformed it from “useful design tool” to “comprehensive creative platform”. This matters enormously for educators who’ve historically been priced out of professional design software.

Free Affinity Integration: The October integration of the Affinity design suite – previously a £70+ investment and now available free to all Canva users – represents a seismic shift. Professional-grade vector, pixel, and layout tools are suddenly accessible to every teacher with a Canva account. No more choosing between spending department budgets on Adobe Creative Cloud or making do with basic tools.

AI Enhancements That Actually Matter:

- Smart art style matching: Upload any image and Canva’s AI can now generate additional assets in the same style. For creating cohesive classroom displays or presentation themes, this is genuinely useful

- 3D object generation: Want a 3D model of the solar system for a science presentation? The AI can generate it

- Magic Charts: This is brilliant for data-heavy subjects. Import your data, and Canva suggests the most effective visualisation. For teachers creating resources about statistical analysis or presenting pupil progress data, this removes significant friction

Workflow Features for Teachers:

- AI-powered voiceovers: Text-to-speech that doesn’t sound robotic. Useful for creating accessible materials or flipped classroom videos

- Bulk creation tools: Generate hundreds of personalised certificates, reports, or differentiated worksheets from a single template and data source

- Enhanced video editor: The Video Editor 2.0 update makes creating revision videos or lesson recordings genuinely straightforward – I’ve used this in place of our Screencastify account as it is more accessible and user-friendly.

The Education Pricing Reality: Canva offers free accounts for verified educators with access to premium features. Combined with the Affinity integration, this represents professional-grade design capabilities at zero cost – a rare example of EdTech pricing that actually works for schools.

Why This Matters: The democratisation of professional design tools means teachers can create genuinely high-quality resources without specialist training or expensive software. The barrier between “I want to make this” and “I can make this” has effectively collapsed. Whether that’s good for work-life balance is another question entirely (spoiler: it’s not, but at least the resources look nice).

The AI Wrapper Maturation

Quizizz/Wayground, Diffit, MagicSchool and many others – these have shifted from “impressive demonstrations” to “genuinely solving real problems”. The integration feels natural rather than forced.

The Multimodal Revolution

Here’s where things get genuinely interesting (and genuinely concerning). The advancement that matters most isn’t any single feature – it’s the convergence of multimodal capabilities across platforms in late 2025.

What Students Can Actually Do Right Now:

- Photograph homework problems and receive instant solutions (Gauth app – ubiquitous, powerful, no age verification required)

- Upload images for detailed analysis and explanations (Claude, Gemini, ChatGPT all handle this natively now)

- Generate professional infographics from text descriptions (NotebookLM makes this genuinely accessible)

- Create presentations from rough notes (increasingly sophisticated, decreasingly obvious it’s AI-generated)

- Produce video content with AI assistance (accessible entirely on mobile devices)

This isn’t theoretical. This isn’t “coming soon”. This is happening right now, in your students’ pockets, probably while you’re reading this sentence.

The Critical Implication – Shortcutting Thought vs. Amplifying Capability

This brings us to the unavoidable truth I’ve been wrestling with since these capabilities became mainstream in autumn 2025: we’re not just giving students powerful tools; we’re potentially giving them elaborate mechanisms to avoid thinking altogether.

Consider this progression:

Traditional homework challenge: Student struggles with maths problem → applies learned methods → develops problem-solving skills through productive struggle

Current AI-enabled shortcut: Student photographs problem → receives instant solution → copies answer → learns nothing

The tool hasn’t made learning more accessible; it’s made the appearance of learning more accessible. And that’s a crucial distinction we need to grapple with.

But It’s Not That Simple (It Never Is)

The same multimodal capabilities that enable thoughtless shortcutting also enable:

- Visual learners to engage with text in ways that suit their cognitive processing

- Students with processing difficulties to access content at appropriate levels

- Creative applications that weren’t previously possible without specialist software or extensive training

- Differentiation at genuine scale that individual teachers simply cannot provide

A tool that can present information simultaneously via text, image, audio, and video fundamentally changes what’s possible for students who struggle with traditional text-heavy approaches. The question isn’t whether these capabilities are good or bad. They’re both, simultaneously, depending entirely on how they’re deployed and whether we’re teaching critical engagement alongside access.

What This Means for Classroom Practice: Three Truths

Truth One: Students Already Have Full Access

We can pretend they don’t. We can ban phones and block websites and create elaborate assessment conditions. But unless you’re conducting all learning in examination halls under supervision, students have complete access to these tools outside your classroom. They’re using them for homework. They’re using them for coursework (where it’s allowed – and sometimes where it isn’t).

The question isn’t “how do we stop them?” It’s “how do we teach them to use these tools as learning partners rather than thinking replacements?”

Truth Two: Our Assessment System Was Already Broken (AI Just Made It Obvious)

I’ve written extensively about this elsewhere, but it bears repeating: if an AI can pass your assessment by producing the same outputs your students produce, you’re not assessing learning – you’re assessing the ability to produce specific formats that AI excels at replicating.

The Extended Project Qualification report can be largely automated through Perplexity or Gemini. GCSE essay responses can be generated with well-crafted prompts. The fact that this is possible doesn’t mean AI is evil – it means our assessment system was already measuring the wrong things, and AI developments in 2025 have simply made that blindingly obvious. Fortunately the majority of the EPQ is marked on the process through the learning journal and presentation, but still – we need to address the implications.

Truth Three: Integration Is Inevitable (Whether We Like It or Not)

Google is integrating Gemini directly into Classroom. Microsoft is weaving AI throughout Teams. Apple Intelligence is now embedded in iPadOS. These aren’t optional add-ons anymore – they’re becoming core functionality of the platforms our schools rely on. We can be thoughtful about that integration, or we can be dragged along reluctantly. But we cannot stop it.

(And yes, I have extensive thoughts about the ethics of tech companies embedding their AI into educational platforms without meaningful consent or understanding from schools. That’s several blog posts waiting to happen.)

Practical Responses: What I’m Actually Doing

Rather than wringing hands about what AI might enable, here’s what I’m actively implementing in November 2025:

In Planning:

- Using Claude Projects for curriculum-aligned resource generation

- Leveraging NotebookLM for differentiated materials (when the infographics don’t produce spelling errors) and to help me unpick complex topics and concepts for myself

- Employing Gemini for adaptive reading materials, differentiated resources and enhanced content creation.

- Testing Gemini’s multimodal and coding capabilities whilst maintaining backup workflows for when it inevitably loses the plot

In Teaching:

- Explicitly teaching students about AI capabilities and limitations where it is relevant and appropriate (post-16 only at the moment, but we’re looking closely at this).

- Creating assignments that require process documentation, not just polished products

- Using AI as a thinking partner in classroom contexts where I can observe and guide interaction (currently post-16 and only with approved platforms and parental consent).

- Demonstrating both productive and unproductive AI use.

- Creating AI literacy sessions for key student groups

- Liaising with parents and year teams to bring everyone up to speed

In Assessment:

- Designing tasks where AI can support but not replace actual thinking

- Implementing “show your working” requirements for AI-assisted tasks

- Accepting that traditional assessment approaches are increasingly unfit for purpose and designing for the current context as best I can given the constraints of the system.

In Professional Learning:

- Staying current with model capabilities (hence this extensive analysis)

- Testing tools before student exposure (and discovering their failure modes)

- Maintaining critical distance from both hype and panic

- Documenting what actually works versus what sounds impressive in marketing materials

Looking Forward: November 2025 Is Just the Beginning

The pace of change we’ve witnessed in these three months isn’t slowing – if anything, it’s accelerating. Experts are describing November 2025 as killing the “one chatbot for everything” era, with specialised tools emerging for different cognitive tasks. By the time you read this, there will likely be new capabilities I haven’t mentioned. By the time the current Year 7s reach their GCSEs, the AI landscape will be unrecognisable from today.

That’s not hyperbole. That’s just the trajectory we’re on as 2025 draws to a close.

We can’t predict every change. What matters is developing the professional judgment to evaluate new tools quickly, the pedagogical understanding to deploy them appropriately, and the critical thinking to recognise when we’re using AI to enhance learning versus when we’re using it to simply automate old processes that never shift the pedagogical dial.

The Discomforting Conclusion

Multimodal AI capabilities that can receive information in one format and output in another, that can analyse images and generate infographics, that can shortcut the entire thinking process from problem to solution – these aren’t coming. They’re here. They’re in your students’ pockets. They’re increasingly integrated into the platforms your school has already adopted. Hell, AI can now so flawlessly recreate what looks like handwritten text in image form that, short of actually watching kids write, we can never really know whether what they produce is purely their own.

The only question left as we approach 2026 is whether we’re going to engage with this reality thoughtfully and intentionally, or whether we’re going to pretend it isn’t happening whilst our students navigate it alone.

I know which option serves our students better. The question is whether the education system will allow us to choose it.

What AI developments have you noticed in your classroom since September 2025? How are you navigating the balance between AI as tool and AI as thinking replacement? Let’s continue this conversation in the comments.

#AIinEducation #EdTech #TeacherPerspectives #DigitalLiteracy #EducationalTechnology #AIEthics #UKEducation #AI2025